With any new product there can be some confusion over the exact range and scope of its features, and this is just as true for VS2010 Lab Management as any other. In fact, given the number of moving parts (the infrastructure you need in place to get it running), it can be more confusing than average. In this post I will cover the questions I have seen most often.

What does ‘Network Isolation’ really mean?

The biggest confusion I have seen is over what ‘network isolated’ means. Lab Management allows you to run a number of copies of a given test environment, and each instance of an environment is ‘network isolated’ from the others. This means that each instance of the environment can have server VMs with the same names without errors being generated. WHAT IT DOES NOT MEAN is that each of these environments is fully isolated from your corporate or test LAN. Think about it, how could this work? I am sad to say there is still no shipment date for Microsoft Magic Pixie Net (MMPN); until it is available we will still need a logical connection to any virtual machine under test, else we cannot control or monitor it.

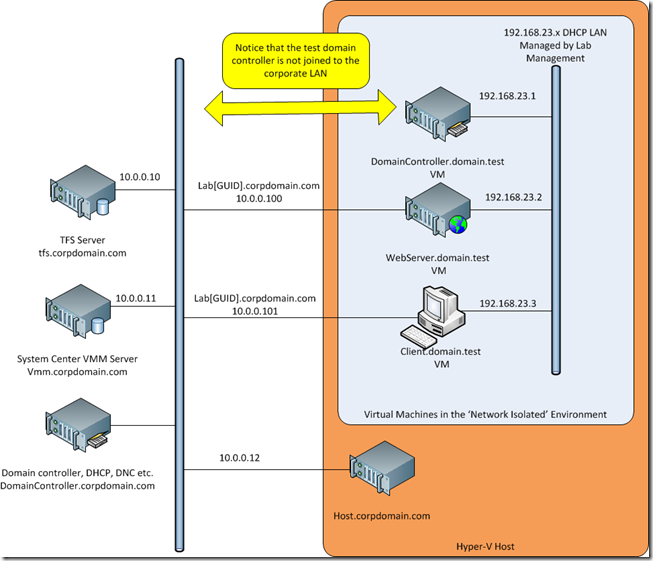

So what does ‘Network Isolation’ actually mean? Well, it basically means Lab Management will add a second network card to each VM in your environment (with the exception of domain controllers, I will come back to that). These secondary connections are the way you usually manage the VMs in the environment, so you end up with something like the diagram above.

Lab Management creates the 192.168.23.x virtual LAN to which all the VMs in the environment connect. If you want to change the IP address range, this is set in the TFS administration console, but I suspect needing to change it will be rare.

If the PCs in your environment are in a workgroup there is no more to do, but if you have a domain within your environment (i.e. you included a test domain controller VM in your environment, as shown above) you also need to tell the Lab Management environment which server is the domain controller. THIS IS VERY IMPORTANT. This is done in the Visual Studio 2010 Test Manager application, where you set up the environment.

When all this is done and the environment is deployed, a second LAN card is added to all VMs in the environment (with the exception of the domain controller you told it about, if present). These LAN cards are connected to the corporate LAN; each is given an IP address by your corporate LAN DHCP server and assigned a name of the form LAB[Guid].corpdomain.com (you can alter this domain name to something like LAB[Guid].test.corpdomain.com in the TFS administration console if you want). This second connection is special in that the VMs' NETBIOS names are not broadcast over it onto the corporate LAN, thus allowing multiple copies of the ‘network isolated’ environment to be run. In each case each VM will have a unique name on the corporate LAN, but keep its original name within the test (192.168.x.x) environment.
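To make the naming concrete, here is a minimal Python sketch of the idea. It is only a model of the behaviour described above, not how Lab Management is implemented: the `deploy_copy` helper, the VM names, the way addresses are handed out and the exact text of the GUID are all assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmInstance:
    internal_name: str            # name seen inside the isolated environment
    internal_ip: str              # address on the private 192.168.23.x LAN
    external_name: Optional[str]  # unique alias on the corporate LAN, if any


def deploy_copy(vm_names, domain_controller=None, corp_suffix="corpdomain.com"):
    """Model one deployed copy of an environment (hypothetical helper)."""
    instances = []
    for index, name in enumerate(vm_names, start=1):
        if name == domain_controller:
            # The test domain controller gets no corporate LAN connection.
            alias = None
        else:
            # Every other VM in every copy gets a fresh GUID-based alias.
            alias = f"LAB{uuid.uuid4()}.{corp_suffix}"
        instances.append(VmInstance(name, f"192.168.23.{index}", alias))
    return instances


template = ["DC01", "WEB01", "SQL01"]
copy_a = deploy_copy(template, domain_controller="DC01")
copy_b = deploy_copy(template, domain_controller="DC01")

for vm in copy_a + copy_b:
    print(vm.internal_name, vm.internal_ip, vm.external_name)
```

Both copies print the same internal names and addresses, which is exactly why they would clash without isolation, while every external alias is different.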

Other than blocking NETBIOS, the ‘special connection’ is not restricted in any other way. So any of the test VMs can use its own connection to the corporate LAN to access any corporate (or internet) resources, such as patch update servers. The only requirement will be to log in to the corporate domain if authentication is required; remember that on the test environment you will be logged into the test domain or local workgroup.

I mentioned that the test domain controller is not connected to the corporate LAN. This is to make sure corporate users don’t try to authenticate against it by mistake and to stop different copies of the test domain controller trying to sync.

All clear? So ‘network isolated’ does not mean fully isolated, but the ability to have multiple copies of the same environment running at the same time with the magic done behind the scenes auto-magically by Lab Management. Maybe not the best piece of feature naming in the world!

So how does a tester actually connect to the test VMs from their PC?

Well, obviously they don’t use a magic MMPN connection; there has to be a valid logical connection. There are actually two possible answers here. I suspect the most common will be remote desktop straight to the guest test VMs, using the LAB[Guid].corpdomain.com name. You might be wondering how you find out these IDs; you can get them from the Test Manager application by looking at a VM’s system info in any running environment. Because you can look them up in this way, a tester can either use the Windows RDP application itself or, more probably, just connect to the VMs from within Test Manager, where it will use RDP behind the scenes.
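If you do want to script the plain RDP route rather than go through Test Manager, a rough Python sketch might look like the one below. It relies on the `/v:` switch of the standard Windows Remote Desktop client (`mstsc.exe`); the helper names and the all-zero GUID are placeholders I have made up, so substitute the name you looked up in Test Manager.

```python
import subprocess


def rdp_command(external_name):
    """Build the command line for the Windows Remote Desktop client."""
    return ["mstsc", f"/v:{external_name}"]


def connect(external_name):
    """Launch the RDP client; only meaningful on a Windows PC with mstsc.exe."""
    return subprocess.Popen(rdp_command(external_name))


# Placeholder name only; a real one comes from the VM's system info.
example_name = "LAB00000000-0000-0000-0000-000000000000.corpdomain.com"
print(" ".join(rdp_command(example_name)))
```

The sketch only prints the command; call `connect(example_name)` on a tester’s Windows PC to actually open the session.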

The other option is to use what is called a host connection. This is when Test Manager connects to the test VMs via the Hyper-V host. For this to work the tester needs suitable Hyper-V rights and the correct tools on their local PC, not just Test Manager. The same could also be achieved using the Hyper-V manager or the SCVMM console. Host mode is how you would connect to a test domain controller that has no direct connection to the corporate LAN.

The choice of connection and tool will depend on what the tester is trying to do. I would expect Test Manager to be the tool of choice in most cases.

Do I need Network Isolation – is there another option?

This all depends on what you want to do; there are good descriptions of the possible architectures in the Lab Management documentation. If you don’t think ‘network isolation’ as described above is right for you, the only other option that can provide similar environment separation is to not run the environments ‘network isolated’, but to provide each environment with a single explicit connection to the corporate LAN via a firewall such as TMG that allows the connection.

It goes without saying that this is more complex than using the standard ‘network isolated’ model built into Lab Management, so make sure it is really worth the effort before starting down this route.

What agents do I need to install?

There are a number of agents involved in Lab Management; these allow network isolation management, deployment and testing. The ones you need depend on what you are trying to do. If you want all the features, not surprisingly you need them all. If this is what you want to do then use the VMPrep tool, it makes life easier. If you don’t want it all you can choose (though it might be easier to just install all of them as standard).

If you want to gather test data you need the test agent, and if you want to deploy code you need the lab workflow agent. The less obvious one is that for ‘network isolation’ you need the Lab Agent installed; it is through this agent that the network isolation LAN is configured.
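The feature-to-agent mapping just described can be written down as a small lookup. This Python sketch simply restates the paragraph above; the feature labels and the `agents_needed` helper are my own invention for illustration and are not part of any Lab Management tooling.

```python
# Which agent each capability depends on, as described in this post.
AGENT_FOR_FEATURE = {
    "testing": "test agent",
    "deployment": "lab workflow agent",
    "network isolation": "lab agent",
}


def agents_needed(features):
    """Return the sorted list of agents to install for the wanted features."""
    unknown = set(features) - set(AGENT_FOR_FEATURE)
    if unknown:
        raise ValueError(f"unknown feature(s): {sorted(unknown)}")
    return sorted({AGENT_FOR_FEATURE[feature] for feature in features})


print(agents_needed(["testing", "network isolation"]))
print(agents_needed(AGENT_FOR_FEATURE))  # everything, i.e. the VMPrep case
```

Asking for every feature returns all three agents, which is the situation where running VMPrep is the easier route.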

Any other limitations I might have missed?

The most obvious is that many companies will use failover clustering and a SAN to make a resilient Hyper-V cluster. Unfortunately this technology is not currently supported by Lab Management. This is easy to miss as, to my knowledge, it is only referred to once in the documentation, in an FAQ section.

The effect of this is to not allow shared SAN storage between any Hyper-V hosts or, more importantly, between the VMM Library and the Hyper-V hosts. This means that all deployment of environments has to be over the LAN; the faster SAN to SAN operations cannot be used as these need clustering.

I suppose another limitation of having no clustering is that you cannot hot migrate environments between Hyper-V hosts, but I don’t see this as much of an issue; these are meant to be lab test environments, not live production high resilience VMs.

This is a good reason to make sure that you separate your production Hyper-V hosts from your test ones. Make the production servers a failover cluster and the test ones just a host group. Let Lab Management work out which server in the host group (assuming there is more than one) to place the environment on.

So I hope that helps a bit. I am sure I will find more common questions, and I will post about them as they emerge.