[Updated 10 Nov 2010: Also see [More] Fun with WCF, SharePoint and Kerberos]

I have been battling some WCF authentication problems for a while now. I have been migrating our internal support desk call tracking system so that it runs as a webpart hosted inside SharePoint 2010 and uses WCF to access the backend services, all using AD authentication. This means both our staff and customers can use a single sign-on for all SharePoint and support desk operations. This replaces our older architecture, which used forms authentication and a complex mix of WCF and ASMX web services that had grown up over time; this call tracking system started as an Access DB with a VB6 front end well over 10 years ago!

As with most of our SharePoint development, I try not to work inside a SharePoint environment when developing. For this project this was easy, as the webpart is hosted in SharePoint but makes no calls to any SharePoint artefacts. This meant I could host the webpart within a test .ASPX web page for my development without the need to mock out SharePoint. This I did, refactoring my old collection of web services to the new AD-secured WCF architecture.

So at the end of this refactoring I thought I had a working webpart, but when I deployed it to our SharePoint 2010 farm it did not work. When I checked my logs I saw WCF authentication errors. The webpart, which created its WCF bindings programmatically, worked in my test harness but failed in production.

A bit of reading soon showed the problem lay in the Kerberos double hop issue, and this is where the fun began. In this post I have tried to detail the solution, not all the dead ends I went down to get there. The problem is that for this type of issue there is one valid solution and millions of incorrect ones, and the diagnostic options are few and far between.

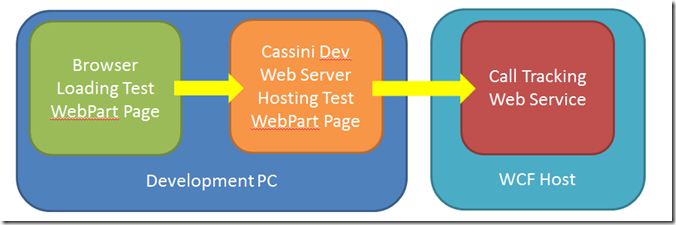

So you may be asking, what is the Kerberos double hop issue? Well, a look at my test setup shows the problem.

[It is worth at this point getting an understanding of Kerberos. The TechEd session ‘Kerberos with Mark Minasi’ is a good primer]

The problem with this test setup is that the browser and the web server that hosts the test web page (and hence the webpart) are on the same box and running under the same account. Hence the web server has full access to the credentials and can pass them on to the WCF host, so there is no double hop.

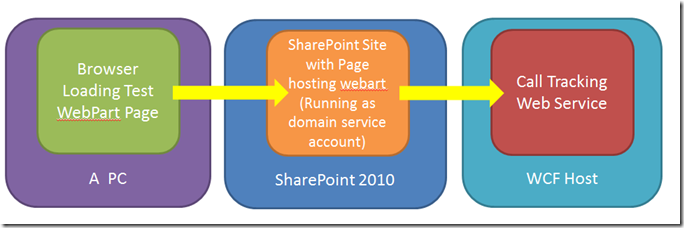

However when we look at the production SharePoint architecture

We see that we do have a double hop. The PC (browser) passes credentials to the SharePoint server. The SharePoint server needs to be able to pass them on to the WCF hosted services, so they can be used to access data for the original client account (the one logged into the PC), but by default this is not allowed. This is a classic Kerberos double hop. The SharePoint server must be set up so that it is allowed to delegate the Kerberos tickets to the next host, and the WCF host must be set up to accept the Kerberos ticket.

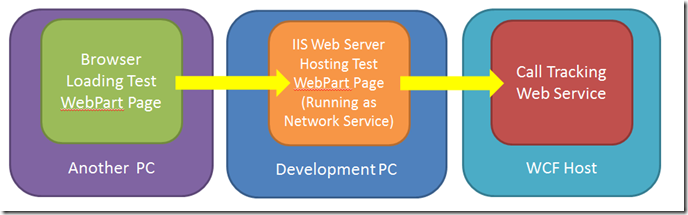

Frankly, we fiddled for ages trying to sort this in SharePoint, but were getting nowhere. The key step for me was to modify my test harness so I could get the same issues outside SharePoint. As with all technical problems, the answer is usually to create a simpler model that can exhibit the same problem. The main features of this change were that I had to have three boxes, and that the web pages had to run inside a web server where I could control the account it ran as, i.e. not Visual Studio’s default Cassini development web server.

So I built this system

Using this model I could get the same errors inside and outside of SharePoint. I could then build up to a solution step by step. It is worth noting that I found the best debugging option was to run DebugView on the middle Development PC hosting the IIS server. This showed all the logging information from my webpart. I saw no errors on the WCF host, as the failure was at the WCF authentication level, well before my code was accessed.
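As an illustration of the kind of logging that DebugView can pick up, here is a minimal sketch that writes the current Windows identity to the trace output. The class name `IdentityTrace` and the `[WebPartDiag]` prefix are hypothetical and not part of my webpart; it assumes the code is compiled with the `TRACE` symbol defined, so that the default trace listener forwards the message to the debug output that DebugView captures.

```csharp
using System;
using System.Diagnostics;
using System.Security.Principal;

// Hypothetical diagnostic helper, not the webpart's real logging code.
public static class IdentityTrace
{
    // Summarise who the code is running as and how the identity was established.
    public static string Describe(WindowsIdentity identity)
    {
        return string.Format(
            "user={0}; auth={1}; level={2}",
            identity.Name,
            identity.AuthenticationType,
            identity.ImpersonationLevel);
    }

    public static void Main()
    {
        // In a web page or webpart this would be called at the point
        // just before the WCF client is used.
        using (WindowsIdentity current = WindowsIdentity.GetCurrent())
        {
            Trace.WriteLine("[WebPartDiag] " + Describe(current));
        }
    }
}
```

Run on the middle box, the `user` value shows at a glance whether the code is running as the browser user or as the web server's own account, and the `auth` value shows whether Kerberos was used at all.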

Next I started from the WCF Kerberos sample on Marbie’s blog. I modified the programmatic binding in the webpart to match this sample:

```
// needs: using System; using System.Security.Principal; using System.ServiceModel;
var callServiceBinding = new WSHttpBinding();
callServiceBinding.Security.Mode = SecurityMode.Message;
callServiceBinding.Security.Message.ClientCredentialType = MessageCredentialType.Windows;
// disable credential negotiation and establishment of the security context
callServiceBinding.Security.Message.NegotiateServiceCredential = false;
callServiceBinding.Security.Message.EstablishSecurityContext = false;

callServiceBinding.MaxReceivedMessageSize = 2000000;

this.callServiceClient = new BlackMarble.Sabs.WcfWebParts.CallService.CallsServiceClient(
    callServiceBinding,
    new EndpointAddress(new Uri("http://mywcfbox:8080/CallsService")));

this.callServiceClient.ClientCredentials.Windows.AllowedImpersonationLevel = TokenImpersonationLevel.Impersonation;
```

I then created a new console application wrapper for my web service. This again used the programmatic binding from the sample.

```
// needs: using System; using System.ServiceModel; using System.ServiceModel.Description;
static void Main(string[] args)
{
    // create the service host
    ServiceHost myServiceHost = new ServiceHost(typeof(CallsService));

    // create the binding
    var binding = new WSHttpBinding();

    binding.Security.Mode = SecurityMode.Message;
    binding.Security.Message.ClientCredentialType = MessageCredentialType.Windows;

    // disable credential negotiation and establishment of the security context
    binding.Security.Message.NegotiateServiceCredential = false;
    binding.Security.Message.EstablishSecurityContext = false;

    // Create a URI for the endpoint address
    Uri httpUri = new Uri("http://mywcfbox:8080/CallsService");

    // Create the Endpoint Address with the SPN for the Identity
    EndpointAddress ea = new EndpointAddress(httpUri,
        EndpointIdentity.CreateSpnIdentity("HOST/mywcfbox.blackmarble.co.uk:8080"));

    // Get the contract from the interface
    ContractDescription contract = ContractDescription.GetContract(typeof(ICallsService));

    // Create a new Service Endpoint
    ServiceEndpoint se = new ServiceEndpoint(contract, binding, ea);

    // Add the Service Endpoint to the service
    myServiceHost.Description.Endpoints.Add(se);

    // Open the service
    myServiceHost.Open();
    Console.WriteLine("Listening... " + myServiceHost.Description.Endpoints[0].ListenUri.ToString());
    Console.ReadLine();

    // Close the service
    myServiceHost.Close();
}
```

I then needed to run the console server application on the WCF host. I made sure the console server was using the same port as I had been using in IIS, and copied the application to the WCF server on which I had been running my services within IIS. Obviously I stopped the IIS hosted site first to free up the port for my endpoint.

As [Marbie’s blog](http://marbie.wordpress.com/2008/05/30/kerberos-delegation-and-service-identity-in-wcf/) stated, I needed to run my server console application as a service account (Network Service or Local System). To do this I used the at command to schedule it starting, because you cannot log in as either of these accounts and cannot use runas as they have no passwords. So my start command was as below, where the time was a minute or two in the future.

> at 15:50 cmd /c c:\tmp\WCFServer.exe

To check the server was running I used Task Manager and netstat -a to make sure something was listening on the expected account and port, in my case Local System and 8080. To stop the service I also used Task Manager.

I next needed to register the SPN of the WCF endpoint. This was done with the command

> setspn -a HOST/mywcfbox.blackmarble.co.uk:8080 mywcfbox

Note that the final parameter was mywcfbox (the server name). In effect I was saying that my service would run as a system service account (Network Service or Local System) on that machine, which for me was fine. So what had this command done? It put an entry in Active Directory to say that this host and this account are running an approved service.

Note: Do make sure you only declare a given SPN once. If you duplicate an SPN neither works; this is a by-design security feature. You can check the SPNs defined using

> setspn -l mywcfbox
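Because a duplicated SPN fails silently, it can be worth asking the directory directly which accounts hold a given SPN. The following is only a sketch of one way to do that from code, not something I used at the time: the class name `SpnCheck` is hypothetical, and it assumes it is run on a domain-joined machine, with a reference to System.DirectoryServices, by a user who can read the directory.

```csharp
using System;
using System.DirectoryServices; // add a reference to System.DirectoryServices.dll

// Hypothetical helper: lists every directory object that declares a given SPN.
public static class SpnCheck
{
    public static void Main(string[] args)
    {
        // Default is the SPN registered with setspn above; pass another as the first argument.
        string spn = args.Length > 0 ? args[0] : "HOST/mywcfbox.blackmarble.co.uk:8080";

        // Searches the current domain. Assumes the SPN contains no LDAP
        // filter special characters such as * ( ) or \.
        using (var searcher = new DirectorySearcher("(servicePrincipalName=" + spn + ")"))
        using (SearchResultCollection results = searcher.FindAll())
        {
            foreach (SearchResult result in results)
            {
                Console.WriteLine(result.Path);
            }

            Console.WriteLine(results.Count == 1
                ? "SPN is registered exactly once."
                : "SPN is registered on " + results.Count + " accounts; expected 1.");
        }
    }
}
```

If more than one path is listed, the SPN is duplicated and should be removed from all but the account the service really runs as.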

I then tried to load my test web page, but it still did not work. This was because the DevelopmentPC, hosting the web server, was not set to allow delegation. This is again set in the AD. To set it I:

1. connected to the Domain Server

2. selected ‘Manage users and computers in Active Directory’.

3. browsed to the computer name (DevelopmentPC) in the ‘Computers’ tree

4. right-clicked and selected ‘Properties’

5. selected the ‘Delegation’ tab.

6. and set ‘Trust this computer for delegation to any service’.

I also made sure the IIS server settings on the DevelopmentPC were set as follows, to make sure the credentials were captured and passed on.

![IIS settings on the DevelopmentPC](/wp-content/uploads/sites/2/historic/image_59CEE4C3.png)

Once all this was done it all leapt into life. I could load and use my test web page from a browser on either the DevelopmentPC itself or the other PC.

The next step was to put the programmatically declared WCF bindings into the IIS web server’s web.config, as I still wanted to host my web service in IIS. This gave me a web.config system.serviceModel section of

```
<system.serviceModel>
  <bindings>
    <wsHttpBinding>
      <binding name="SabsBinding">
        <security mode="Message">
          <message clientCredentialType="Windows" negotiateServiceCredential="false" establishSecurityContext="false" />
        </security>
      </binding>
    </wsHttpBinding>
  </bindings>
  <services>
    <service behaviorConfiguration="BlackMarble.Sabs.WcfService.CallsServiceBehavior" name="BlackMarble.Sabs.WcfService.CallsService">
      <endpoint address="" binding="wsHttpBinding" contract="BlackMarble.Sabs.WcfService.ICallsService" bindingConfiguration="SabsBinding">
      </endpoint>
      <endpoint address="mex" binding="mexHttpBinding" contract="IMetadataExchange" />
    </service>
  </services>
  <behaviors>
    <serviceBehaviors>
      <behavior name="BlackMarble.Sabs.WcfService.CallsServiceBehavior">
        <serviceMetadata httpGetEnabled="true" />
        <serviceDebug includeExceptionDetailInFaults="true" />
        <serviceAuthorization impersonateCallerForAllOperations="true" />
      </behavior>
    </serviceBehaviors>
  </behaviors>
</system.serviceModel>
```

I then stopped the EXE based server, made sure I had the current service code on my IIS hosted version and restarted IIS, so my WCF web service was running as Network Service under IIS7 and .NET4. It still worked, so I now had an end-to-end solution using Kerberos. I knew both my server and client had valid configurations, in the format I wanted.

Next I upgraded my SharePoint solution so that it included the revised webpart code and tested again, and guess what, it did not work. So it was time to think: what was different between my test harness and SharePoint?

The basic SharePoint logical stack is as follows

![SharePoint logical stack](/wp-content/uploads/sites/2/historic/image_3F2248AA.png)

The key was the account which the webpart was running under. On my test box the IIS server was running as Network Service, hence it was correct to set in the AD that delegation was allowed for the computer DevelopmentPC. On our SharePoint farm we had allowed similar delegation for SharepointServer1 and SharepointServer2 (hence Network Service on these servers). However, our webpart was not running under a Network Service account but under a named domain account. It was this account, blackmarble\spapp, that needed to be granted delegation rights in the AD.
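Checking which account is actually trusted for delegation can be done from code as well as from the ‘Delegation’ tab. The sketch below is an assumption about how one might do it, not something from my original investigation: the class name `DelegationCheck` is hypothetical, it needs a reference to System.DirectoryServices and a domain-joined machine, and it only detects the unconstrained ‘trust for delegation to any service’ setting, which is stored as the 0x80000 bit of the account's userAccountControl attribute. Constrained delegation is stored elsewhere and will not show up here.

```csharp
using System;
using System.DirectoryServices; // add a reference to System.DirectoryServices.dll

// Hypothetical helper: reports whether an account is trusted for (unconstrained) delegation.
public static class DelegationCheck
{
    // ADS_UF_TRUSTED_FOR_DELEGATION bit of the userAccountControl attribute.
    private const int TrustedForDelegation = 0x80000;

    public static void Main(string[] args)
    {
        if (args.Length != 1)
        {
            // Computer accounts end in $, e.g. DEVELOPMENTPC$; user accounts do not.
            Console.WriteLine("Usage: DelegationCheck <sAMAccountName>");
            return;
        }

        using (var searcher = new DirectorySearcher("(sAMAccountName=" + args[0] + ")"))
        {
            searcher.PropertiesToLoad.Add("userAccountControl");
            SearchResult result = searcher.FindOne();

            if (result == null || !result.Properties.Contains("userAccountControl"))
            {
                Console.WriteLine("Account not found, or userAccountControl not readable.");
                return;
            }

            int flags = Convert.ToInt32(result.Properties["userAccountControl"][0]);
            bool trusted = (flags & TrustedForDelegation) != 0;

            Console.WriteLine(args[0] + (trusted
                ? " is trusted for delegation to any service."
                : " is NOT trusted for delegation to any service."));
        }
    }
}
```

Running it once for each front end computer account and once for the application pool account would have shown the mismatch described above: the computers were trusted, the named account was not.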

Still this was not the end of it. All these changes needed to be synchronised out to the various boxes, but after a repadmin on the domain controller and an IISreset on both the SharePoint front end servers it all started working.

So I have the solution I was after. I can start to shut off all the old systems I was using and, more importantly, I have a simpler, stable model for future development. But what have I learnt? Well, Kerberos is not as mind bending as it first appears, but you do need a good basic understanding of what is going on. Also, there are great tools like Klist to help look at Kerberos tickets, but for problems like this the issue is more a complete lack of a ticket. The only solution is to build up your system step by step. Trust me, you will learn more doing it this way; there is no quick fix, and you learn far more from failure than from success.